Crawling alone is not enough to build a search engine.

Information identified by the crawlers needs to be organized, sorted and stored so that it can be processed by the search engine algorithms before being made available to the end user.

This process is called indexing.

Search engines don’t store all the information found on a page in their index, but they keep things like when it was created or updated, the title and description of the page, the type of content, associated keywords, incoming and outgoing links, and many other parameters that their algorithms need.
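To make the list above concrete, here is a minimal sketch of what a single index record could look like. The `IndexEntry` class, its field names, and the example URL are all hypothetical and chosen only for illustration; real search engines do not publish their internal schema and store far more parameters than this.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class IndexEntry:
    """Hypothetical record for one indexed page (field names are illustrative)."""
    url: str
    title: str
    description: str
    content_type: str
    last_updated: date
    keywords: list[str] = field(default_factory=list)
    incoming_links: list[str] = field(default_factory=list)
    outgoing_links: list[str] = field(default_factory=list)


# Example values are made up; they only show how the fields fit together.
entry = IndexEntry(
    url="https://example.com/what-is-indexing",
    title="What Is Indexing?",
    description="How search engines organize crawled pages.",
    content_type="article",
    last_updated=date(2024, 5, 20),
    keywords=["indexing", "search engine", "crawling"],
    outgoing_links=["https://example.com/what-is-crawling"],
)

print(entry.title, len(entry.keywords))
```

The point of the sketch is that the index holds a summary of each page (metadata, keywords, links) rather than a full copy of it.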

Google likes to describe its index as being like the index at the back of a book (a really big book).
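The book comparison maps onto a data structure usually called an inverted index: each word points to the pages where it appears, just as a book index points to page numbers. The following is a simplified sketch, not how any particular search engine implements it; the function names and the example pages are assumptions made for illustration.

```python
from collections import defaultdict


def build_inverted_index(pages):
    """Map each word to the set of page URLs that contain it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for word in text.lower().split():
            index[word].add(url)
    return index


def lookup(index, word):
    """Return the sorted URLs listed under a word, like page numbers in a book index."""
    return sorted(index.get(word.lower(), set()))


# Made-up pages standing in for crawled content.
pages = {
    "https://example.com/crawling": "crawlers discover pages",
    "https://example.com/indexing": "indexing organizes pages",
}
index = build_inverted_index(pages)

print(lookup(index, "pages"))
print(lookup(index, "ranking"))
```

A word that appears on no indexed page returns an empty list, which is the code-level version of a site that never shows up because it is not in the index.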

Why care about the indexing process?

It’s very simple: if your website is not in a search engine’s index, it will not appear for any searches.

This also implies that the more pages you have in the search engine indexes, the better your chances of appearing in the search results when someone types a query.

Notice that I said ‘appear in the search results’, which means in any position, not necessarily in the top positions or on the first pages.

Search

Popular on Blogar

2024 Codecademy Premium Account Cookies

- May 20,2024

- 457 Views

2024 Grammarly Premium Account Cookies

- May 20,2024

- 356 Views

2024 Canva Pro Free Team Invite Link And Cookies

- May 20,2024

- 445 Views

Perplexity AI Premium Cookies 2024

- Apr 17,2024

- 478 Views

2024 Udemy Premium Account Cookies

- Apr 15,2024

- 301 Views

2024 ChatGPT 4 Premium Account Free Cookies

- Apr 12,2024

- 545 Views

ORPALIS PaperScan Professional Edition 4.0.10 Cracked

- Mar 29,2024

- 482 Views

Email Marketing-What Is Email Marketing?

- Oct 28,2022

- 520 Views

Content Marketing-What is Content Marketing

- Oct 27,2022

- 757 Views

Social Media Marketing-Social Media Marketing (SMM)

- Oct 27,2022

- 766 Views

Pay-Per-Click-What is PPC

- Oct 27,2022

- 716 Views

EaseUS Fixo Technician v1.5.5 Build 20240412 Cracked

- Apr 16,2024

- 92 Views

Nitro PDF Pro v14.22.1.0 Enterprise (x64)

- Feb 21,2024

- 91 Views

2024 Free Netflix Premium Account Cookies

- May 17,2024

- 897 Views

January 11-2024-X40 Disney+ Premium Accounts

- Jan 11,2024

- 86 Views

When and Why Do You Need to Copy a Website?

- Apr 23,2024

- 84 Views

IObit Driver Booster Pro v11.4.0.57 Cracked

- Apr 17,2024

- 82 Views

Understanding Organic SEO

- Apr 24,2024

- 79 Views

Recent Post

2024 Codecademy Premium Account Cookies

- May 20th 2024

- 457 Views

2024 Grammarly Premium Account Cookies

- May 20th 2024

- 356 Views

2024 Canva Pro Free Team Invite Link And Cookies

- May 20th 2024

- 445 Views

Perplexity AI Premium Cookies 2024

- April 17th 2024

- 478 Views

2024 Udemy Premium Account Cookies

- April 15th 2024

- 301 Views

2024 ChatGPT 4 Premium Account Free Cookies

- April 12th 2024

- 545 Views

ORPALIS PaperScan Professional Edition 4.0.10 Cracked

- March 29th 2024

- 482 Views

Email Marketing-What Is Email Marketing?

- October 28th 2022

- 520 Views

Content Marketing-What is Content Marketing

- October 27th 2022

- 757 Views

Social Media Marketing-Social Media Marketing (SMM)

- October 27th 2022

- 766 Views

Pay-Per-Click-What is PPC

- October 27th 2022

- 716 Views

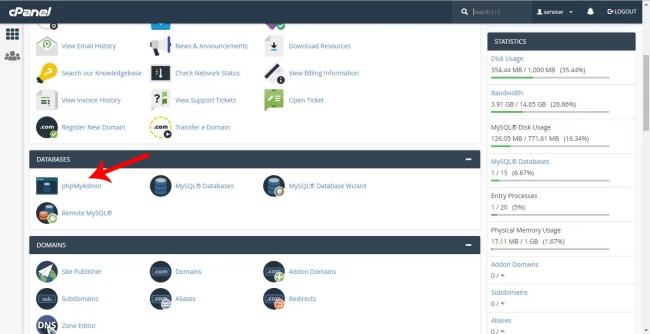

How to Optimize MySQL Databases

- May 20th 2024

- 10 Views

Copyright © 2024 BBBBF All Rights Reserved.